Deep learning and machine learning use many activation functions. Libraries and frameworks make them easy to call, but they hide how each function is actually computed.

In this post I am going to show how each of these functions can be coded directly from its mathematical definition.

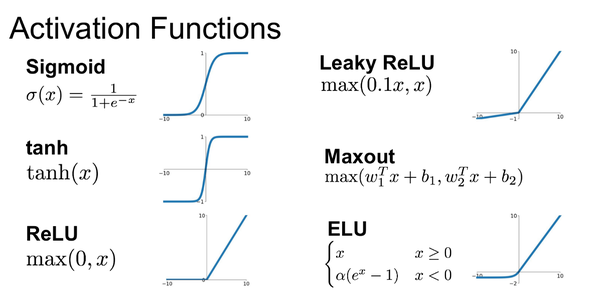

List of activation functions covered:

- Sigmoid

- ReLU (Rectified Linear Unit)

- Leaky ReLU

- Tanh (hyperbolic tangent)
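For reference, the usual mathematical definitions of these four functions can be written as below. This is a summary sketch rather than part of the original notes; the symbol $\alpha$ for the leaky ReLU slope is an assumed name for a small positive constant.

```latex
\begin{align*}
\sigma(z) &= \frac{1}{1 + e^{-z}} \\
\mathrm{ReLU}(z) &= \max(0, z) \\
\mathrm{LeakyReLU}(z) &= \begin{cases} z & z > 0 \\ \alpha z & z \le 0 \end{cases} \\
\tanh(z) &= \frac{e^{z} - e^{-z}}{e^{z} + e^{-z}} = \frac{2}{1 + e^{-2z}} - 1
\end{align*}
```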

SIGMOID

Pythonic representation:

    import numpy as np

    def sigmoid(z):
        # Logistic sigmoid: maps any real input into the range (0, 1)
        return 1 / (1 + np.exp(-z))
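For very large negative inputs, `np.exp(-z)` overflows and NumPy emits a warning. One possible way around this is sketched below; the name `stable_sigmoid` is hypothetical, and this is only one of several ways to avoid the overflow.

```python
import numpy as np

def stable_sigmoid(z):
    """Sigmoid that only ever exponentiates non-positive numbers (hypothetical helper)."""
    z = np.asarray(z, dtype=float)
    e = np.exp(-np.abs(z))  # always in (0, 1], so it cannot overflow
    # z >= 0: 1 / (1 + e^-z);  z < 0: e^z / (1 + e^z), which is the same value
    return np.where(z >= 0, 1 / (1 + e), e / (1 + e))

z = np.array([-1000.0, -2.0, 0.0, 2.0, 1000.0])
print(stable_sigmoid(z))
```

Both branches are algebraically identical to `1 / (1 + np.exp(-z))`; the split only changes which exponent gets evaluated.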

RELU (Rectified Linear Unit)

Pythonic representation:

    def ReLU(z):
        # (z > 0) is a boolean mask, so non-positive inputs are multiplied by 0
        return z * (z > 0)
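The derivative of ReLU, which is needed during backpropagation, can be written in the same masking style. The sketch below assumes `z` is a NumPy array and takes the derivative at exactly `z = 0` to be 0, which is a convention rather than a mathematical fact.

```python
import numpy as np

def ReLU(z):
    # Keep positive values, zero out the rest
    return z * (z > 0)

def dReLU(z):
    # Slope is 1 for positive inputs and 0 otherwise (0 at z == 0 by assumption)
    return 1.0 * (z > 0)

z = np.array([-2.0, 0.0, 3.0])
print(ReLU(z))
print(dReLU(z))
```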

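LRELU (Leaky Rectified Linear Unit)

Pythonic representation (the slope `alpha` for negative inputs is a hyperparameter; 0.01 is assumed here as a common choice):

    def leaky_relu(z, alpha=0.01):
        # Positive inputs pass through; negative inputs are scaled by alpha
        return np.where(z > 0, z, alpha * z)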
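To see how leaky ReLU differs from plain ReLU on negative inputs, here is a small comparison sketch. The parameter name `alpha` and its default of 0.01 are assumptions chosen for illustration, not values fixed by the definition.

```python
import numpy as np

def ReLU(z):
    return z * (z > 0)

def leaky_relu(z, alpha=0.01):
    # alpha is an assumed small slope applied to non-positive inputs
    return np.where(z > 0, z, alpha * z)

z = np.array([-10.0, -1.0, 0.0, 2.0])
print(ReLU(z))                   # negative inputs are zeroed
print(leaky_relu(z))             # negative inputs are scaled by 0.01
print(leaky_relu(z, alpha=0.2))  # a larger slope keeps more of the negative signal
```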

TANH

Pythonic representation:

    def tanh(z):
        # Equivalent to (e^z - e^-z) / (e^z + e^-z)
        return 2 / (1 + np.exp(-2 * z)) - 1
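A quick way to check the hand-written tanh is to compare it with NumPy's built-in `np.tanh` and with the identity tanh(z) = 2·sigmoid(2z) − 1. The sketch below does both on a few sample points; the sample range is arbitrary.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def tanh(z):
    return 2 / (1 + np.exp(-2 * z)) - 1

z = np.linspace(-3, 3, 7)

# Agreement with NumPy's reference implementation
print(np.allclose(tanh(z), np.tanh(z)))

# tanh is a rescaled, shifted sigmoid
print(np.allclose(tanh(z), 2 * sigmoid(2 * z) - 1))
```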

These notes cover only the most commonly used activation functions in machine learning and deep learning models.

You can follow me on:

LINKED-IN: https://www.linkedin.com/in/vpkprasanna/

GITHUB: https://github.com/VpkPrasanna